Character encoding is a really tricky thing to get your head around. You usually think in terms of characters, and as a programmer that serves you well, since almost everything you type will come from the standard Latin alphabet (i.e. a-z, A-Z and 0-9, plus punctuation marks). This is the set of characters that was first available for use in computers back in the day, but now that computing is worldwide, we need a means to display characters from all of the world’s many languages.

You will no doubt end up dealing with characters (or, more likely, strings of characters) if you interface with public services where people create the content themselves, such as Twitter or Facebook. In this post I aim to explain all you need to know about character encoding from the perspective of an iOS (or Mac) developer.

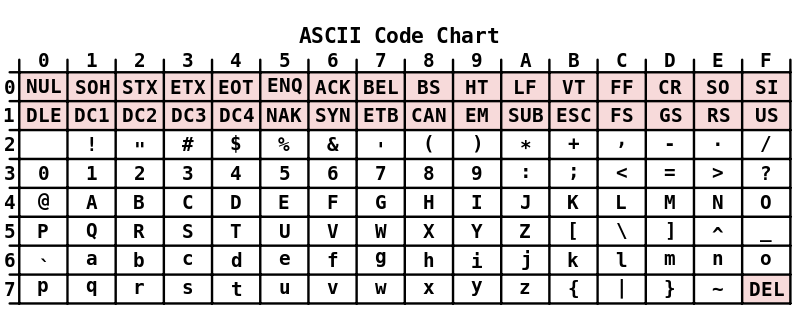

In the beginning there was ASCII

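One of the first things computers needed to be able to do was to store, print and communicate text. This led to the invention of a standard way of encoding text so that all computers could talk to and understand each other, and it was called ASCII. In short, ASCII is a 7-bit encoding, so it can encode a total of 128 different characters, which is enough to cover the standard Latin alphabet. Each character is given a different value, including some control characters such as the newline and tab characters. There are other control characters which I won’t go into, but if you want, you can read more about them over at Wikipedia. Here’s a table of those characters:

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | A | B | C | D | E | F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | NUL | SOH | STX | ETX | EOT | ENQ | ACK | BEL | BS | HT | LF | VT | FF | CR | SO | SI |
| 1 | DLE | DC1 | DC2 | DC3 | DC4 | NAK | SYN | ETB | CAN | EM | SUB | ESC | FS | GS | RS | US |
| 2 | SP | ! | " | # | $ | % | & | ' | ( | ) | * | + | , | - | . | / |
| 3 | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | : | ; | < | = | > | ? |
| 4 | @ | A | B | C | D | E | F | G | H | I | J | K | L | M | N | O |
| 5 | P | Q | R | S | T | U | V | W | X | Y | Z | [ | \\ | ] | ^ | _ |
| 6 | ` | a | b | c | d | e | f | g | h | i | j | k | l | m | n | o |
| 7 | p | q | r | s | t | u | v | w | x | y | z | { | \| | } | ~ | DEL |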

The column represents the lower 4 bits and the row represents the upper 3 bits. So, for example, W sits in row 5, column 7, which makes it 0x57. This is all well and good, but what about other languages’ characters? What about all those Chinese, Japanese and chickens (yep, you may want to display this “character”: 🐔) out there?! Well, that’s where Unicode comes in!
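To check the W example, here is a small Swift sketch (Swift being the natural choice for an iOS or Mac developer). It relies on the standard library's `Character.asciiValue`; the `asciiPosition(of:)` helper is just an illustrative name, not an existing API.

```swift
/// Hypothetical helper: where an ASCII character sits in the table. The row is
/// the upper 3 bits of its value and the column the lower 4 bits.
/// Returns nil for characters outside ASCII.
func asciiPosition(of character: Character) -> (row: UInt8, column: UInt8)? {
    guard let value = character.asciiValue else { return nil }
    return (row: value >> 4, column: value & 0x0F)
}

let w: Character = "W"
print(String(w.asciiValue!, radix: 16, uppercase: true))  // 57
print(asciiPosition(of: w)!)                               // (row: 5, column: 7)
print(asciiPosition(of: "🐔") as Any)                      // nil: not an ASCII character
```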

Along comes Unicode

Unicode solved the problem of needing to represent more than just the Latin set of characters. It aims to define every possible character you could ever want to represent. Each of these characters is assigned a code point: a unique number within the Unicode space, which runs from U+0000 up to U+10FFFF (so every code point fits in 21 bits). For instance, here are some of the code points:

- A = U+41

- b = U+62

- ! = U+21

- 三 = U+4E09

- 💩 = U+1F4A9

- 🐔 = U+1F414
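If you want to see these values for yourself, here is a short Swift sketch. Swift calls code points "Unicode scalars" and exposes them through `String.unicodeScalars`; `describeCodePoints(_:)` is a hypothetical helper that formats them in the same style as the list above.

```swift
/// Hypothetical helper: formats each code point in `text` like "A = U+41".
func describeCodePoints(_ text: String) -> [String] {
    text.unicodeScalars.map { scalar in
        "\(scalar) = U+\(String(scalar.value, radix: 16, uppercase: true))"
    }
}

// Prints one line per code point: A = U+41, b = U+62, ..., 🐔 = U+1F414
for line in describeCodePoints("Ab!三💩🐔") {
    print(line)
}
```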

If you look back up at the ASCII table, you’ll notice that the code point values of the first three (the only ones contained within ASCII) are identical to their ASCII values. This is because all ASCII characters are placed within the Unicode space at their ASCII values, to aid backwards compatibility.

Character Encoding

The Unicode standard also defines several standard character encodings (UTF-8, UTF-16 and UTF-32). These standards are crucial so that computers can talk to one another and exchange information: they define how each code point is represented in binary. ASCII itself is an encoding too. So if you wanted to send the word Hello in ASCII, you would send 01001000 01100101 01101100 01101100 01101111 down the wire.

NB: This is slightly simplified with regard to ASCII. There are a few different variants of it. See Wikipedia for further information.

However, this encoding only supports the ASCII range, not the full Unicode range. That’s where UTF-8 and friends come in…
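The Hello example, and the ASCII-only limitation, can be sketched in Swift as below. `asciiBytes(_:)` and `binaryString(_:)` are hypothetical helpers written for illustration; in a real app you would lean on Foundation's encoding APIs instead.

```swift
/// Hypothetical hand-rolled ASCII encoder: one byte per code point, or nil if
/// any code point lies outside the 7-bit ASCII range (above U+7F).
func asciiBytes(_ text: String) -> [UInt8]? {
    var bytes: [UInt8] = []
    for scalar in text.unicodeScalars {
        guard scalar.isASCII else { return nil }
        bytes.append(UInt8(scalar.value))
    }
    return bytes
}

/// Formats bytes as space-separated, 8-digit binary.
func binaryString(_ bytes: [UInt8]) -> String {
    bytes.map { byte -> String in
        let bits = String(byte, radix: 2)
        return String(repeating: "0", count: 8 - bits.count) + bits  // left-pad to 8 bits
    }.joined(separator: " ")
}

print(binaryString(asciiBytes("Hello")!))
// 01001000 01100101 01101100 01101100 01101111
print(asciiBytes("Hello 🐔") as Any)  // nil: the chicken has no ASCII value
```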

UTF-8

UTF-8 is a character encoding that can represent the full Unicode range. It uses variable-width blocks of bytes to represent single codepoints, with a clever mechanism whereby the leading bits of a block’s first byte tell you how wide the block is, according to the following table:

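| First byte | Width (bytes) |
|---|---|
| 0xxxxxxx | 1 |
| 110xxxxx | 2 |
| 1110xxxx | 3 |
| 11110xxx | 4 |
| 111110xx | 5 |
| 1111110x | 6 |

NB: The 5- and 6-byte forms come from UTF-8’s original design. Since RFC 3629 (2003) restricted UTF-8 to the Unicode range U+0000 to U+10FFFF, valid UTF-8 never uses more than 4 bytes, so lead bytes of the form 111110xx and 1111110x no longer appear.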

All subsequent bytes in a block start with 10. So, for example, a block of width 3 would be 1110xxxx 10xxxxxx 10xxxxxx. All the x’s are then up for grabs to hold the bits of a codepoint. So blocks of width 3 have 4 + 6 + 6 = 16 payload bits and can represent codepoints up to U+FFFF, while blocks of width 4 have 3 + 6 + 6 + 6 = 21 bits, enough for everything up to U+10FFFF.
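To make the table concrete, here is a hand-rolled UTF-8 encoder in Swift. It is an illustrative sketch (the `utf8Encode(_:)` name is made up) covering only the 1- to 4-byte forms; real code should simply use `String.utf8`, which the last line compares against.

```swift
/// Hypothetical hand-rolled UTF-8 encoder for a single code point,
/// filling the x's of each block pattern from the table.
func utf8Encode(_ scalar: Unicode.Scalar) -> [UInt8] {
    let v = scalar.value
    switch v {
    case 0..<0x80:          // 0xxxxxxx: 7 payload bits
        return [UInt8(v)]
    case 0x80..<0x800:      // 110xxxxx 10xxxxxx: 11 payload bits
        return [UInt8(0xC0 | (v >> 6)),
                UInt8(0x80 | (v & 0x3F))]
    case 0x800..<0x10000:   // 1110xxxx 10xxxxxx 10xxxxxx: 16 payload bits
        return [UInt8(0xE0 | (v >> 12)),
                UInt8(0x80 | ((v >> 6) & 0x3F)),
                UInt8(0x80 | (v & 0x3F))]
    default:                // 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx: 21 payload bits
        return [UInt8(0xF0 | (v >> 18)),
                UInt8(0x80 | ((v >> 12) & 0x3F)),
                UInt8(0x80 | ((v >> 6) & 0x3F)),
                UInt8(0x80 | (v & 0x3F))]
    }
}

let chicken: Unicode.Scalar = "🐔"
let encoded = utf8Encode(chicken)
print(encoded.map { String($0, radix: 16, uppercase: true) })  // ["F0", "9F", "90", "94"]
print(encoded == Array("🐔".utf8))                              // true
```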

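If you look carefully, you will notice that UTF-8 is entirely compatible with ASCII: a 1-byte block (0xxxxxxx) holds exactly the values 0x00 to 0x7F, and those are the codepoints at which Unicode places the ASCII characters. This means that if there’s a document encoded in ASCII, a reader configured to read UTF-8 will parse it absolutely fine. That’s useful, isn’t it!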
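A quick way to convince yourself of this in Swift: take the ASCII bytes of Hello (hard-coded below from the ASCII table) and decode them with the standard library's UTF-8 decoder via `String(decoding:as:)`.

```swift
// "Hello" as produced by an ASCII encoder: the values straight from the ASCII table.
let helloASCII: [UInt8] = [0x48, 0x65, 0x6C, 0x6C, 0x6F]

// Every byte is below 0x80, i.e. each one is a complete 1-byte UTF-8 block (0xxxxxxx)...
print(helloASCII.allSatisfy { $0 < 0x80 })  // true

// ...so a UTF-8 decoder reads the ASCII bytes back unchanged.
let decoded = String(decoding: helloASCII, as: UTF8.self)
print(decoded)                              // Hello
print(Array(decoded.utf8) == helloASCII)    // true
```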

As an example, consider the phrase Hello 🐔三💩. Let’s try to work out how that should be encoded:

| Char | Codepoint | UTF-8 Binary | UTF-8 Hex |
|---|---|---|---|
| H | U+48 | 01001000 | 0x48 |
| e | U+65 | 01100101 | 0x65 |
| l | U+6C | 01101100 | 0x6C |
| l | U+6C | 01101100 | 0x6C |
| o | U+6F | 01101111 | 0x6F |
| <space> | U+20 | 00100000 | 0x20 |
| 🐔 | U+1F414 | 11110000 10011111 10010000 10010100 | 0xF0 0x9F 0x90 0x94 |
| 三 | U+4E09 | 11100100 10111000 10001001 | 0xE4 0xB8 0x89 |
| 💩 | U+1F4A9 | 11110000 10011111 10010010 10101001 | 0xF0 0x9F 0x92 0xA9 |
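The table can be reproduced with a few lines of Swift. `utf8Row(_:)` is a hypothetical helper; the bytes come from the standard library's own UTF-8 view rather than from the hand calculation, which makes it a handy cross-check. The last line also shows why a string's code point count and its byte count differ.

```swift
/// Hypothetical helper: one row of the table for a single code point,
/// i.e. the character, its code point and its UTF-8 bytes in hex.
func utf8Row(_ scalar: Unicode.Scalar) -> String {
    let codePoint = "U+" + String(scalar.value, radix: 16, uppercase: true)
    let bytes = String(Character(scalar)).utf8
        .map { "0x" + String($0, radix: 16, uppercase: true) }
        .joined(separator: " ")
    return "\(scalar) | \(codePoint) | \(bytes)"
}

let phrase = "Hello 🐔三💩"
for scalar in phrase.unicodeScalars {
    print(utf8Row(scalar))
}
// 9 code points, but 6 + 4 + 3 + 4 = 17 bytes of UTF-8
print(phrase.unicodeScalars.count, phrase.utf8.count)  // 9 17
```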

Consider the chicken, U+1F414, and look at its UTF-8 binary:

11110000 10011111 10010000 10010100

Removing the prefix bits from each byte (11110 from the first byte and 10 from the others), you end up with:

000 011111 010000 010100

Regrouped into hex digits from the right (0 0001 1111 0100 0001 0100), this is 0x1F414, i.e. the codepoint for the chicken.
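The reverse process, stripping the prefix bits and concatenating what remains, can be sketched like this. `utf8Decode(_:)` is an illustrative name, and the sketch assumes it is handed exactly one well-formed block; it does no validation.

```swift
/// Hypothetical decoder for ONE well-formed UTF-8 block (1 to 4 bytes):
/// strips the prefix bits and concatenates the remaining payload bits.
func utf8Decode(_ bytes: [UInt8]) -> UInt32 {
    // How many payload bits the first byte carries depends on the block width.
    let firstPayloadMask: UInt8
    switch bytes.count {
    case 1: firstPayloadMask = 0x7F   // 0xxxxxxx
    case 2: firstPayloadMask = 0x1F   // 110xxxxx
    case 3: firstPayloadMask = 0x0F   // 1110xxxx
    default: firstPayloadMask = 0x07  // 11110xxx
    }
    var value = UInt32(bytes[0] & firstPayloadMask)
    for byte in bytes.dropFirst() {
        value = (value << 6) | UInt32(byte & 0x3F)  // drop the leading 10, keep 6 bits
    }
    return value
}

let chickenBytes: [UInt8] = [0xF0, 0x9F, 0x90, 0x94]
print(String(utf8Decode(chickenBytes), radix: 16, uppercase: true))  // 1F414
```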

Well, that’s UTF-8 for you. Pretty simple, eh? There’s really not much more to it than that, but if you do want to read up on some of the subtleties, check out Wikipedia.

Now on to some more encodings.

UTF-16

UTF-16 is another encoding that covers the same range as UTF-8; however, it works in units of exactly 2 bytes (16 bits). This means it is trivial to encode all the codepoints up to U+FFFF in a single unit, but what about codepoints above that, you may ask? Well, that’s where the concept of surrogate pairs comes in. These are special pairs of 16-bit values which, when put next to each other, represent a higher codepoint. The process goes like this:

- Subtract 0x10000 from the codepoint.

- Consider the remainder as a 20-bit number.

- Add 0xD800 to the top ten bits of that number. This becomes the first part of the surrogate pair.

- Add 0xDC00 to the bottom ten bits. This becomes the second part of the surrogate pair.

For example, consider the 🐔 character again. This is codepoint U+1F414, so subtracting 0x10000 leaves 0xF414. The top ten bits are 0000111101, which is 0x3D, leading to a first surrogate of 0xD83D. The bottom ten bits are 0000010100, which is 0x14, leading to a second surrogate of 0xDC14. So in UTF-16, the chicken character is encoded as 0xD83D 0xDC14.
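The four surrogate steps can be sketched in Swift as follows. `surrogatePair(for:)` is a hypothetical helper; the final line cross-checks it against `String.utf16`, the standard library's UTF-16 view.

```swift
/// Hypothetical helper: splits a codepoint above U+FFFF into a UTF-16 surrogate pair.
func surrogatePair(for codePoint: UInt32) -> (high: UInt16, low: UInt16) {
    precondition(codePoint > 0xFFFF && codePoint <= 0x10FFFF, "needs a codepoint above U+FFFF")
    let remainder = codePoint - 0x10000             // treat as a 20-bit number
    let high = UInt16(0xD800 + (remainder >> 10))   // top ten bits
    let low = UInt16(0xDC00 + (remainder & 0x3FF))  // bottom ten bits
    return (high, low)
}

let pair = surrogatePair(for: 0x1F414)
print(String(pair.high, radix: 16, uppercase: true), String(pair.low, radix: 16, uppercase: true))
// D83D DC14
print(Array("🐔".utf16) == [pair.high, pair.low])  // true
```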

You may now be wondering what happens to a codepoint such as U+D800 in UTF-16. Well, there are no characters there: the range U+D800 to U+DFFF is reserved in Unicode precisely so that UTF-16 can use those values for its surrogate pairs.
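Swift's standard library reflects this reservation, as the sketch below shows (the `isValidScalarValue(_:)` wrapper is illustrative): `Unicode.Scalar`'s failable initialiser rejects surrogate values, a proper pair decodes to a single codepoint, and a lone surrogate decodes to U+FFFD, the replacement character.

```swift
/// Hypothetical wrapper: true if `value` is a codepoint Swift accepts as a
/// Unicode scalar, i.e. at most U+10FFFF and outside U+D800...U+DFFF.
func isValidScalarValue(_ value: UInt32) -> Bool {
    Unicode.Scalar(value) != nil
}

print(isValidScalarValue(0xD800), isValidScalarValue(0xE000))  // false true

// A high/low surrogate pair decodes to one codepoint above U+FFFF...
let pairUnits: [UInt16] = [0xD83D, 0xDC14]
print(String(decoding: pairUnits, as: UTF16.self))              // 🐔

// ...but a lone surrogate is not a character, so the decoder substitutes U+FFFD.
let lone = String(decoding: [UInt16(0xD800)], as: UTF16.self)
print(lone.unicodeScalars.map { $0.value } == [0xFFFD])         // true
```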

What now?

So why should you care about all this as an iOS developer? Well, you just should. At some point in your iOS development you will very likely get data in from a web service. It is highly likely to be encoded using something like JSON, which just so happens to be a text-based format, and that text is of course encoded using a character encoding. The bytes will usually come down the wire to you and pop up in your app as an instance of NSData. You’ll then turn it into a string using NSString’s initWithData:encoding: method. And notice the second parameter: encoding! That’s where you need to know what the encoding is. In reality, you usually just assume UTF-8, since that is by far the most common encoding, but really you should work it out properly, for example from the charset parameter of the Content-Type HTTP header field on the response if you are using HTTP to transfer the data.
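Here is a rough Swift sketch of that advice, using Foundation's `String(data:encoding:)`, the Swift counterpart of `initWithData:encoding:`. The `stringEncoding(forContentType:)` helper is hypothetical and maps only a handful of charset names, falling back to UTF-8; treat it as an illustration rather than a complete charset parser.

```swift
import Foundation

/// Hypothetical helper (not a Foundation API): picks a String.Encoding from a
/// Content-Type value such as "application/json; charset=utf-8".
/// Only a few charsets are mapped; a missing or unknown charset falls back to UTF-8.
func stringEncoding(forContentType contentType: String?) -> String.Encoding {
    guard let contentType = contentType else { return .utf8 }
    for parameter in contentType.split(separator: ";") {
        let parts = parameter.split(separator: "=", maxSplits: 1).map { String($0) }
        guard parts.count == 2,
              parts[0].trimmingCharacters(in: .whitespaces).lowercased() == "charset"
        else { continue }
        // Strip optional quotes and spaces: charset="UTF-8" is also legal.
        let charset = parts[1].trimmingCharacters(in: CharacterSet(charactersIn: "\" ")).lowercased()
        switch charset {
        case "utf-8": return .utf8
        case "utf-16": return .utf16
        case "iso-8859-1": return .isoLatin1
        case "us-ascii": return .ascii
        default: return .utf8
        }
    }
    return .utf8
}

// Bytes as they might arrive from a web service: "Hello 🐔" in UTF-8.
let body = Data([0x48, 0x65, 0x6C, 0x6C, 0x6F, 0x20, 0xF0, 0x9F, 0x90, 0x94])
let encoding = stringEncoding(forContentType: "application/json; charset=utf-8")
// String(data:encoding:) returns nil if the bytes are not valid in that encoding.
if let text = String(data: body, encoding: encoding) {
    print(text)  // Hello 🐔
}
```

On Apple platforms, `URLResponse` also has a `textEncodingName` property that gives you the charset name sent by the server, if any, which saves parsing the header yourself.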

Well, I’d really love to explain more stuff about this, but I don’t have the time right now. Hopefully I’ll get a chance soon to give some more examples and flesh this post out.